The Australian Clinical Trials Alliance (ACTA) impact and implementation reference group has published guidance on clinical trial planning, design, conduct and reporting to optimise the impact of late‐phase trials.1 It is critical that clinical trials are designed, conducted and reported so that the evidence they generate can be implemented and applied to improve practice, health systems and policy (defined as implementability).1 This is particularly important for late‐phase trials, as their results guide stakeholder decisions on the adoption or removal of candidate interventions from practice and policy. The results can also guide implementation, potentially supplemented by other study types. In some cases, decisions on candidate interventions may be based on a single trial, although more commonly they are based on a synthesis of evidence from multiple trials. If the results of positive late‐phase trials are not put into effect, the community benefit, impact and return on investment are lost. Therefore, to reduce waste and optimise value to the community, which is both the funder and the beneficiary of research, we need to maximise the impact of trials. This impact includes health and economic benefits and requires trials to be designed for implementability.2,3,4

Implementability and relationships with pragmatic and embedded trials

Implementability is key to trial design and delivery, independent of the results of the trial. Impact depends on both intervention efficacy and implementability. Late‐phase trials should therefore incorporate implementability and be designed to be useful to their diverse end users (including organisations, governments, clinicians and consumers).

Characteristics that contribute to implementability overlap with those of trials that are embedded in clinical care and are pragmatic: a focus on informing clinical practice and policy, and consideration of real‐world investigators, recruitment, participants, interventions, delivery and outcomes. The PRECIS‐2 framework (PRagmatic Explanatory Continuum Indicator Summary) indicates where a trial sits on the spectrum from the explanatory question “Can this intervention work under ideal conditions?” to the pragmatic question “Does this intervention work under usual conditions?”. This framework highlights the vital need to identify and involve end users and to co‐design or tailor the trial to their needs.5 The Consolidated Framework for Implementation Research (CFIR) and similar tools are also useful to guide the design and evaluation of implementation research.6,7 Key design considerations for implementability include eligibility and exclusion criteria, setting, the need for a skilled workforce or specialised equipment, and the extent of data collection. Trial design contributes to both embedding and implementability, but potential differences between strategies that promote trial efficiency and those that promote the implementability of trial findings need to be recognised.
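PRECIS‐2 scores each of nine trial design domains on a five‐point scale, from 1 (very explanatory) to 5 (very pragmatic). As an illustration only, the minimal Python sketch below records such a profile for a draft design and lists the domains that lean explanatory, which could then be discussed with end users. The domain identifiers, the function name `explanatory_leaning` and the cut‐off of 2 for flagging a domain are assumptions made for this example and are not part of the published tool.

```python
# Hypothetical sketch: recording a PRECIS-2 profile for a draft trial design.
# Each of the nine domains is scored 1 (very explanatory) to 5 (very pragmatic).
PRECIS2_DOMAINS = (
    "eligibility",
    "recruitment",
    "setting",
    "organisation",
    "flexibility_delivery",
    "flexibility_adherence",
    "follow_up",
    "primary_outcome",
    "primary_analysis",
)

# Assumed cut-off: domains scored at or below this are flagged for discussion.
EXPLANATORY_CUTOFF = 2


def explanatory_leaning(scores: dict[str, int]) -> list[str]:
    """Return domains scored at or below EXPLANATORY_CUTOFF, in domain order.

    Raises ValueError if a domain is missing or unknown, or if a score
    falls outside the 1-5 scale.
    """
    unknown = set(scores) - set(PRECIS2_DOMAINS)
    missing = set(PRECIS2_DOMAINS) - set(scores)
    if unknown or missing:
        raise ValueError(f"unknown: {sorted(unknown)}, missing: {sorted(missing)}")
    for domain, score in scores.items():
        if not 1 <= score <= 5:
            raise ValueError(f"{domain}: score {score} is outside 1-5")
    return [d for d in PRECIS2_DOMAINS if scores[d] <= EXPLANATORY_CUTOFF]


if __name__ == "__main__":
    # Hypothetical draft design: mostly pragmatic, but with narrow eligibility
    # and intensive research-only follow-up.
    draft = {domain: 4 for domain in PRECIS2_DOMAINS}
    draft["eligibility"] = 2
    draft["follow_up"] = 1
    print(explanatory_leaning(draft))  # ['eligibility', 'follow_up']
```

Returning the flagged domains, rather than a single combined number, keeps the focus on which specific design choices may limit implementability.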

Best practice in trial planning, design, conduct and reporting

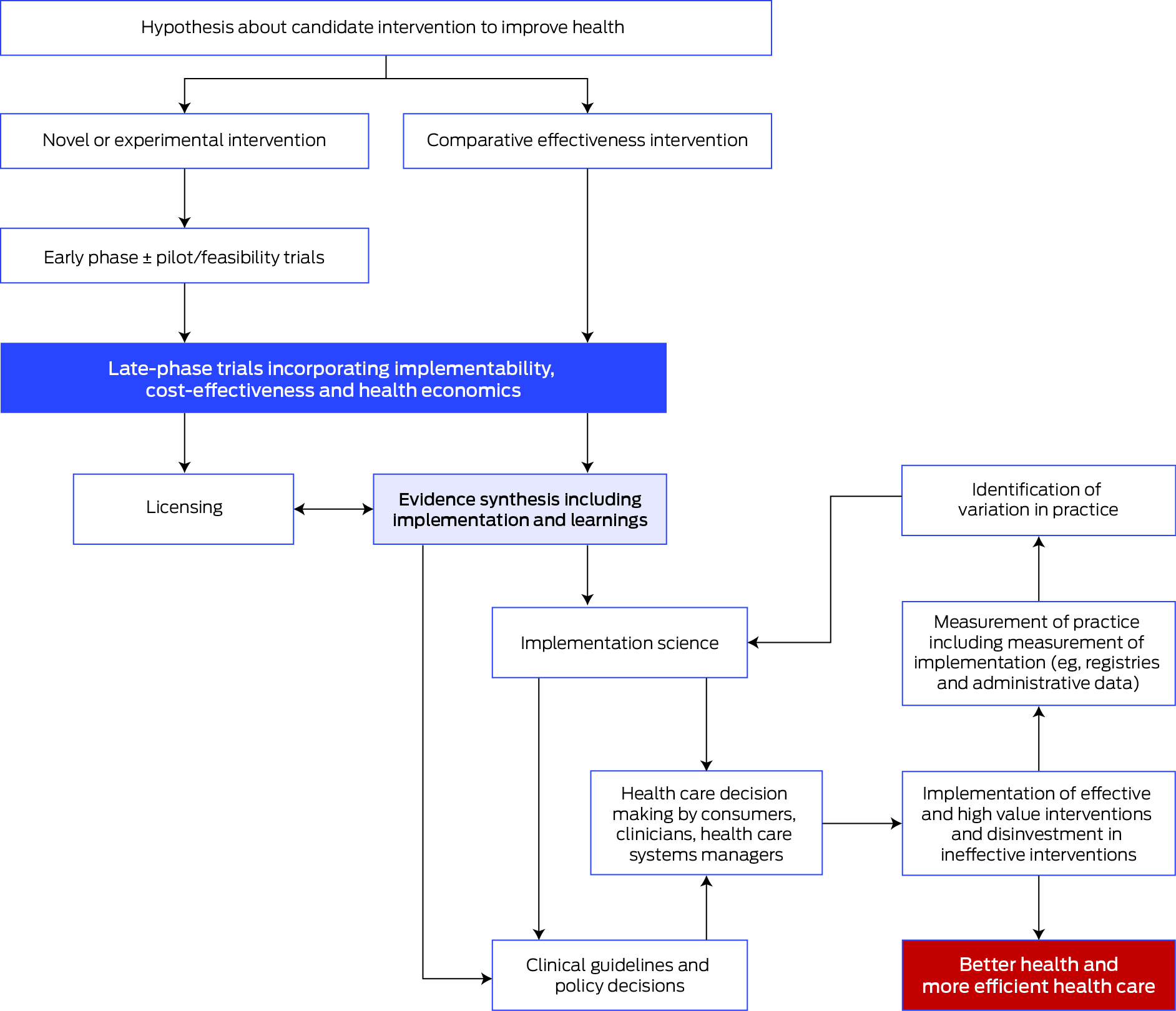

The important steps for progressing interventions through to implementation into practice, health services and policy are shown in the Box.

These steps align with the Australian Academy of Health and Medical Sciences report,8 which emphasises the importance of embedding research into health care and the vital role of workforce and infrastructure in achieving this vision. The report also highlights the important role of National Health and Medical Research Council (NHMRC)‐accredited research translation centres (RTCs) in implementation. The RTCs encompass most research, education and hospital services nationally and are well positioned to support clinical trial networks and enable implementation.9

Trial planning

Trials should be co‐designed with end users, including consumers, who have experience and understanding of current practice.10 It is acknowledged that in some circumstances, such as industry‐sponsored trials, opportunities for co‐design may be limited. Pre‐trial work with end users can establish the relevance and priority of research questions and identify appropriate outcome measures. Formal processes such as Delphi and nominal group techniques can be useful,11 although these need to be appropriate to the local context.

End points must be carefully chosen, including those of value to end users, and the minimum clinically important differences in these end points should be considered. In addition, the minimum public health significant difference, which defines the relationship between a meaningful effect size and the public health disease burden, should be taken into account. For example, a major effect size in a rare disease (such as a cure for an inborn error of metabolism) may be just as important as a minor effect size in a common disease, as it might cure a baby at birth, avoiding lifetime costs and gaining a lifetime of productivity.

The feasibility of intervention delivery should be established before a late‐phase trial. This can be assessed through pilot studies and a systematic review of existing evidence (which may establish the merit of implementing a particular approach to treating a health problem). For drug or device interventions, regulatory approval requirements and burden should also be considered for implementation, alongside the required skills and resources.

Trial design and conduct

To optimise generalisability, consideration should be given to the population to which the trial results will apply. The selected population should be as broad as possible, and entry criteria should be easily interpreted and based on accessible information that is available to clinicians in routine practice and to participants in community‐based trials. If the candidate intervention is targeted to a clinical setting, trial sites should be broadly representative of the centres that provide care to the key population groups in which the intervention would be implemented after the trial. For population‐based studies, community‐based recruitment and decentralised trial designs should be considered. If implementation will extend beyond tertiary or quaternary hospitals, the trial should include these other settings.

Inclusion criteria should be broad and exclusion criteria as limited as possible. Research burden and complexity arising from additional factors, such as research‐only or largely unavailable biomarkers, should be limited. The selected population should be diverse and representative of the population affected by the condition under study. Importantly, populations who experience inequities should be appropriately included through a range of strategies, including engagement with representatives of priority populations.

Interventions should be delivered in ways similar to their current or intended future use in routine clinical practice. An intervention designed to align with routine clinical practice is likely to be easier to embed and to be adopted more quickly. If an intervention shows efficacy in a trial that has considered implementability, it is likely to be broadly effective when implemented into practice. For example, trialists should optimise opportunities for clinical staff (rather than researchers) to deliver interventions. As reach and penetration are key to broader effectiveness, trials that can recruit only a small proportion of a target population are not recommended. Comparators such as the current standard of care (ie, an active control, in which the investigator treats control participants as they normally would) should be included in trial protocols, as this provides the opportunity to make a definitive end‐of‐trial recommendation.

End user consultation and strategies such as run‐in periods can identify challenges in adherence to the intervention. Protocols should include any adjustments or titration needed to achieve efficacy or avoid adverse events, and these should align with application in routine care. Ultimately, including diverse end users can inform the embedded and pragmatic aspects of trial design related to implementability.

Other design considerations include concomitant care; strategies to increase reach (such as limited inclusion and exclusion criteria); analysis (intention‐to‐treat principles are recommended12); limiting trial burden to optimise and sustain participation (eg, by considering the type of consent, requirements for participant follow‐up, and use of streamlined case report forms); embedding trials in a registry;13 capturing factors that influence reach, adoption, fidelity and maintenance, and conducting process evaluation;14 and inclusion of health economic end points. A well designed prospective health economic analysis may be critical to optimise implementation and will inform decision makers regarding investment.15,16,17 Economic analysis should also examine the cost‐effectiveness of interventions across different modelled scenarios, such as for specific population subgroups. This allows equity to be considered and provides policy makers with more nuanced investment options than simple “all or nothing” choices.
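To illustrate how subgroup‐level results can give policy makers more than an “all or nothing” choice, the minimal Python sketch below applies two standard health economic measures to modelled subgroups: the incremental cost‐effectiveness ratio (ICER; the difference in mean cost divided by the difference in mean effect, such as quality‐adjusted life years) and the incremental net monetary benefit (the willingness‐to‐pay threshold multiplied by the difference in effect, minus the difference in cost). All subgroup names, costs, effects and the threshold of $50 000 per unit of effect are hypothetical and are not results from any trial.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Subgroup:
    """Modelled mean cost and effect per participant (hypothetical figures)."""
    name: str
    cost_intervention: float
    cost_comparator: float
    effect_intervention: float  # eg, quality-adjusted life years
    effect_comparator: float

    @property
    def delta_cost(self) -> float:
        return self.cost_intervention - self.cost_comparator

    @property
    def delta_effect(self) -> float:
        return self.effect_intervention - self.effect_comparator


def icer(g: Subgroup) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    if g.delta_effect == 0:
        raise ValueError(f"{g.name}: no difference in effect, ICER undefined")
    return g.delta_cost / g.delta_effect


def incremental_nmb(g: Subgroup, wtp: float) -> float:
    """Incremental net monetary benefit at willingness-to-pay threshold `wtp`.

    A positive value means the intervention is cost-effective versus the
    comparator at that threshold.
    """
    return wtp * g.delta_effect - g.delta_cost


# Hypothetical threshold and modelled subgroup results, for illustration only.
WTP = 50_000.0
SUBGROUPS = [
    Subgroup("high risk", 12_000.0, 9_000.0, 6.5, 6.25),
    Subgroup("moderate risk", 11_000.0, 9_000.0, 8.125, 8.0),
    Subgroup("low risk", 14_000.0, 9_000.0, 7.0625, 7.0),
]

if __name__ == "__main__":
    for g in SUBGROUPS:
        verdict = "cost-effective" if incremental_nmb(g, WTP) > 0 else "not cost-effective"
        print(f"{g.name}: ICER {icer(g):,.0f} per unit of effect; {verdict} at {WTP:,.0f}")
```

Net monetary benefit is used for the decision rule because a negative ICER on its own cannot distinguish an intervention that is cheaper and more effective from one that is costlier and less effective. In this hypothetical example, the intervention would be cost‐effective in the high and moderate risk subgroups but not in the low risk subgroup, supporting a targeted rather than all‐or‐nothing investment decision.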

Trial reporting

Historically, a large proportion of studies have gone unpublished or have been reported with insufficient detail.18 This selective reporting increases the risk of publication bias and must be rectified. All trials must be reported in a timely manner, preferably within 12 months of completion, and those reports must be readily accessible.19 This applies even if a trial has insufficient recruitment or is unable to deliver the intervention, as it will still generate new knowledge on process, challenges and implementability that is relevant to the trial and to implementation into practice. Detailed reporting on factors that affect implementation is important, and considering an implementation protocol can optimise the data generated and help end users implement trial findings into practice. Interventions should be described in enough detail to enable replication, using tools such as the Template for Intervention Description and Replication (TIDieR) checklist.20 If the comparator was protocolised, details of the comparator and its delivery methods should be clear. Open access publication should be prioritised to optimise reach to end users, and affordable open access options are needed to ensure equity and access.21 Additional dissemination tools, channels and strategies (such as implementation guides, evidence syntheses, guidelines, policy summaries, round tables, and health professional and consumer tools) are key to implementation and should be co‐designed with end users. Authors should declare and maintain a record of real or perceived conflicts of interest and provide access to data where possible.

Importance of clinical trial networks

Many, but not all, clinical trials are conducted by networks, with most members holding dual roles as researchers and clinicians. Many networks also include consumers, who represent end users. Consumer and community involvement (CCI) is fundamental, is recommended or required by funders, and is considered good practice. It can include both formal and informal processes across the planning, design, conduct and reporting of trials. Early and embedded CCI, delivered in a structured and meaningful way within the network, also enables appropriate implementation of trial outcomes. Networks can support high‐quality CCI, including the engagement of consumers from the outset that is prioritised by the NHMRC CCI statement. Tokenistic engagement with consumers should be avoided. ACTA, the NHMRC and RTCs provide tools and resources to build capacity and support genuine CCI.22,23,24

Networks can provide access to representative trial sites aligned to the clinical sites and populations where results are applicable. Some networks, potentially with an associated registry, are ideally positioned to monitor implementation (Box). Lastly, networks can facilitate the next steps in dedicated implementation research, involving the systematic study of methods, strategies and pathways that support the application of trial findings into policy and practice. It is acknowledged that implementation science requires different, but overlapping, skills and expertise from those needed for clinical trials, and that capacity building is warranted in both implementability and implementation science.

Final comments

To deliver on the investment and potential benefit of late‐phase clinical trials, it is important that end user‐informed implementability considerations are widely adopted and applied. Further information on best practice for the design, conduct and reporting of studies with a view to implementation can be found on ACTA's website (www.clinicaltrialsalliance.org.au/resource/6258/).

Box – Steps in the process from early clinical trials of a candidate intervention through to implementation in practice and policy leading to improved health outcomes

Source: Adapted from the Australian Clinical Trials Alliance Guidance on Implementability with permission.1

Provenance: Not commissioned; externally peer reviewed.

- 1. Australian Clinical Trials Alliance. Guidance on implementability. Dec 2019. https://clinicaltrialsalliance.org.au/wp‐content/uploads/2020/02/ACTA‐Guidance‐on‐Implementability‐Report.pdf (viewed Dec 2023).

- 2. Ioannidis JP. Why most clinical research is not useful. PLoS Med 2016; 13: e1002049.

- 3. Ford I, Norrie J. Pragmatic trials. N Engl J Med 2016; 375: 454‐463.

- 4. Macleod MR, Michie S, Roberts I, et al. Biomedical research: increasing value, reducing waste. Lancet 2014; 383: 101‐104.

- 5. Loudon K, Zwarenstein M, Sullivan F, et al. Making clinical trials more relevant: improving and validating the PRECIS tool for matching trial design decisions to trial purpose. Trials 2013; 14: 115.

- 6. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci 2009; 4: 50.

- 7. Damschroder LJ, Reardon CM, Opra Widerquist MA, Lowery J. The updated consolidated framework for implementation research based on user feedback. Implement Sci 2022; 17: 75.

- 8. Australian Academy of Health and Medical Sciences. Research and innovation as core functions in transforming the health system: a vision for the future of health in Australia [website]. https://aahms.org/video/vision‐report‐launch‐2022/ (viewed Aug 2024).

- 9. Teede H, Jones A. Advancing implementation of the Australian Academy of Health and Medical Sciences’ report ‐ Research and innovation as core functions in transforming the health system: A vision for the future of Australia. Australian Health Research Alliance, 2023. https://ahra.org.au/wp‐content/uploads/2023/03/AHRA‐response‐to‐AAHMS‐report.pdf (viewed Oct 2023).

- 10. National Health and Medical Research Council. Statement on consumer and community involvement in health and medical research. Australian Government, NHMRC. Sept 2016. https://www.nhmrc.gov.au/about‐us/publications/statement‐consumer‐and‐community‐involvement‐health‐and‐medical‐research.

- 11. The James Lind Alliance. The James Lind Alliance guidebook. Version 10. UK: National Institute for Health and Care Research, 2021.

- 12. Scott IA. Non‐inferiority trials: determining whether alternative treatments are good enough. Med J Aust 2009; 190: 326‐330. https://www.mja.com.au/journal/2009/190/6/non‐inferiority‐trials‐determining‐whether‐alternative‐treatments‐are‐good

- 13. Lasch F, Weber K, Koch A. Commentary: on the levels of patient selection in registry‐based randomized controlled trials. Trials 2019; 20: 100.

- 14. Minary L, Trompette J, Kivits J, et al. Which design to evaluate complex interventions? Toward a methodological framework through a systematic review. BMC Med Res Methodol 2019; 19: 92.

- 15. Tuffaha HW, Gordon LG, Scuffham PA. Value of information analysis informing adoption and research decisions in a portfolio of health care interventions. MDM Policy Pract 2016; 1: 2381468316642238.

- 16. Tuffaha HW, Roberts S, Chaboyer W, et al. Cost‐effectiveness and value of information analysis of nutritional support for preventing pressure ulcers in high‐risk patients: implement now, research later. Appl Health Econ Health Policy 2015; 13: 167‐179.

- 17. Mewes JC, Steuten LMG, IJsbrandy C, et al. Value of implementation of strategies to increase the adherence of health professionals and cancer survivors to guideline‐based physical exercise. Value Health 2017; 20: 1336‐1344.

- 18. Riveros C, Dechartres A, Perrodeau E, et al. Timing and completeness of trial results posted at ClinicalTrials.gov and published in journals. PLoS Med 2013; 10: e1001566.

- 19. National Health and Medical Research Council. NHMRC open access policy. Australian Government, NHMRC. Nov 2023. https://www.nhmrc.gov.au/about‐us/resources/nhmrc‐open‐access‐policy (viewed Oct 2023).

- 20. Hoffmann TC, Glasziou PP, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014; 348: g1687.

- 21. Smith AC, Merz L, Borden JB, et al. Assessing the effect of article processing charges on the geographic diversity of authors using Elsevier's “Mirror Journal” system. Quantitative Sci Studies 2021; 2: 1123‐1143.

- 22. Australian Clinical Trials Alliance. Strengthening consumer engagement in clinical trials [website]. https://clinicaltrialsalliance.org.au/group/strengthening‐consumer‐engagement‐in‐developing‐conducting‐and‐reporting‐clinical‐trials/ (viewed Dec 2023).

- 23. Monash Partners Academic Health Science Centre. Consumer and community involvement [website]. https://monashpartners.org.au/education‐training‐and‐events/cci/ (viewed Dec 2023).

- 24. Australian Health Research Alliance. Consumer and community involvement [website]. https://ahra.org.au/our‐work/consumer‐and‐community‐involvement/ (viewed Aug 2024).

Open access:

Open access publishing facilitated by Monash University, as part of the Wiley ‐ Monash University agreement via the Council of Australian University Librarians.

We acknowledge funding from the Medical Research Future Fund to the Australian Clinical Trials Alliance. We thank Belinda Butcher from WriteSource Medical for assistance with this manuscript and Judith Trotman for her contribution to this manuscript. Belinda Butcher was funded by the Australian Clinical Trials Alliance.

Samantha Keogh reports monies received by her employer Queensland University of Technology from BD Medical and ITL Biomedical for educational consultancies not related to this study. Paul Cohen reports speaker's honoraria from Seqirus and AstraZeneca, and stock and advisory board participation in Clinic IQ. David Johnson has received consultancy fees, research grants, speaker's honoraria and travel sponsorships from Baxter Healthcare and Fresenius Medical Care; consultancy fees from AstraZeneca, Bayer and AWAK; speaker's honoraria from ONO, Boehringer Ingelheim and Lilly; and travel sponsorships from ONO and Amgen. All other authors declare no relevant disclosures.