Abstract

Objective: To develop a model to measure potentially inappropriate care in Australian hospitals.

Design: Secondary analysis of computerised hospital discharge data for all Australian hospitals for the 2010–11 financial year.

Main outcome measure: Hospital-specific incidence of selected diagnosis–procedure pairs identified as inappropriate in other literature.

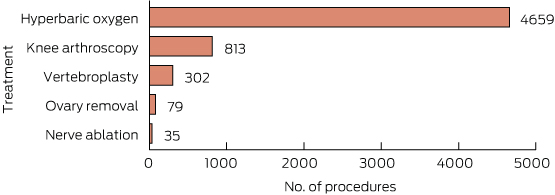

Results: Together, five hospital procedures that are not supported by clinical evidence were performed more than 100 times a week, on average. The most frequent of these do-not-do treatments was hyperbaric oxygen therapy for a range of specific conditions (4659 admissions in 2010–11). The rate of do-not-do procedures varied greatly, even among comparator hospitals that provided the procedure and that treated the relevant patient group. Among comparator hospitals, an average of 3.3% of patients with osteoarthritis of the knee received arthroscopic lavage and debridement of the knee (a do-not-do treatment), but four hospitals had rates of over 20%. There was also great variation in hospital-specific rates of procedures that should not be done routinely.

Conclusion: Hospital-specific rates of do-not-do treatments vary greatly. Hospitals should be informed about their relative performance. Hospitals that have sustained, high rates of do-not-do treatments should be subject to external clinical review by expert peers.

Identifying when the wrong treatments are chosen, and putting a stop to it, is an important way to improve the quality of health care. There are many ways to do this. Strategies include using clinical guidelines or decision support systems, clinician education, clinical engagement, peer review, and adjusting the pricing, funding and availability of individual treatments. All of these methods can be useful. But there are currently few concerted efforts to evaluate and benchmark treatment choices at the hospital level, and to use this information to drive improvement.

Inappropriate care is a longstanding concern in health policy. For over 40 years, small-area analyses have shown significant geographic variation in the rates at which different subpopulations are given common surgical procedures.1,2 The Organisation for Economic Co-operation and Development has recently published this kind of analysis, including a chapter on Australia showing the rates of nine types of hospital admission in different Medicare Local areas, adjusted for age and sex.3 A more detailed atlas of variation and supporting studies has also been published by the Australian Commission on Safety and Quality in Health Care.4

Wide variation in practice patterns has been attributed to clinicians interpreting evidence and guidelines inconsistently, with patients in different areas being under- and overtreated as a result.

A newer but burgeoning body of literature has looked at inappropriate care in a different way, focusing on the interventions themselves rather than their usage. This focus has led to the development of lists of treatments for disinvestment, either because they have not been proven effective, or because testing has shown that they are ineffective or inferior to a substitute treatment.

Both of these approaches to inappropriate care have shortcomings. Small-area variation analysis has uncovered disturbing variation in patterns of care, but has not produced meaningful policy or practice change.5 This is probably because variation analyses rarely take into account legitimate drivers of clinical variation, such as patient morbidity and patient preferences, and so are unable to convincingly differentiate warranted from unwarranted variation.6,7

Although disinvestment work has produced much more compelling evidence of inappropriate care, it has struggled to achieve meaningful policy change. As this study shows, clinicians can continue to use a treatment long after it has been declared inappropriate.

There are few interventions that are ineffective for all patients and indications. Typically, the value of a treatment varies for different types of patients. This complicates measurement of inappropriate care and the development of policies to reduce it. Across-the-board funding cuts, or even funding cuts among specific subgroups of patients, may ignore clinical heterogeneity and deny funding for valuable, as well as ineffective, care.8 A patient may have characteristics that typically rule out a treatment, but have other characteristics that mean they are not well represented in clinical trial samples, or are not eligible for treatments that are recommended as more effective. Approaches are emerging that may help health care organisations and clinicians to distinguish, at the site of care, between patients for whom a certain treatment is warranted and those for whom it is not.8 But in many cases the coded data do not capture all of the relevant clinical variation. This presents a serious challenge to measuring and comparing clinical choices in different regions or among providers.

We sought to develop a practical way to identify which hospitals are most likely to be choosing inappropriate treatments. Our methods draw on the analytical strengths of variation and disinvestment analysis. From disinvestment analysis, we took a selection of treatments that evidence clearly shows should not be done routinely, or at all. From variation analysis, we focused on outlier hospitals — those providing a “do not do” or “do not do routinely” treatment at rates that are far in excess of the national average.

This approach overcomes several key deficiencies of variation and disinvestment research. First, focusing on procedures listed for disinvestment means that high usage rates can be convincingly linked to inappropriate practice. This is not the case in variation analysis, where the procedures analysed are generally considered effective, and where high relative usage in one area can be due to underprovision in other areas.

Second, using variation to focus on providers with high rates of potentially inappropriate care is a practical way to pursue disinvestment. Rather than advocating the removal of clinician discretion with respect to the procedures analysed, this approach advocates monitoring, with priority given to the providers where care is clearly out of step with both clinical evidence and standard practices, and therefore very likely to be inappropriate.

Third, focusing on hospitals rather than geographic areas allowed us to correct for several major deficiencies in other variation analyses. Focusing on hospitals (and specific specialties within those hospitals) allows for analysis of microcultures of care, which are likely to be obscured when practice patterns are aggregated to a regional level. Further, geographically aggregated analyses generally only make crude adjustments for patient morbidity differences, while our use of rich patient-level data allowed us to correct for variation in morbidity to a much greater extent. Finally, there are few viable policy options for dealing with variation at the geographic level. Developing strategies aimed at hospital-level accountability for practice patterns may be more productive in addressing inappropriate care.

Methods

Data

De-identified patient-level data about all public and private hospital separations (discharges, deaths and transfers) for the financial year 2010–11 were obtained from the Australian Institute of Health and Welfare after approval by each state and territory. The dataset included 8 720 771 records from 709 separate public hospital sites in all states except the Australian Capital Territory (private hospitals in each state were all grouped under a single code). Data were released as one record per admission, so it was not possible to link records to derive data on a per-person basis. Names of public hospitals were suppressed as part of the approval process.

Approval from an ethics committee was not required, but data confidentiality requirements were imposed as part of data release.

Selecting treatments for assessment

Potentially ineffective treatments were drawn from published lists of, or recommendations about, inappropriate care. These sources included a list of procedures identified as potential disinvestment candidates;9 a list of procedures where there had been a “reversal of evidence” (that is, where subsequent evidence had shown that early treatment recommendations were no longer appropriate);10 and the decisions of two national health technology assessment bodies, the Medical Services Advisory Committee in Australia and the National Institute for Health and Care Excellence in England. Only guidance published before our data period (2010–11) was used.

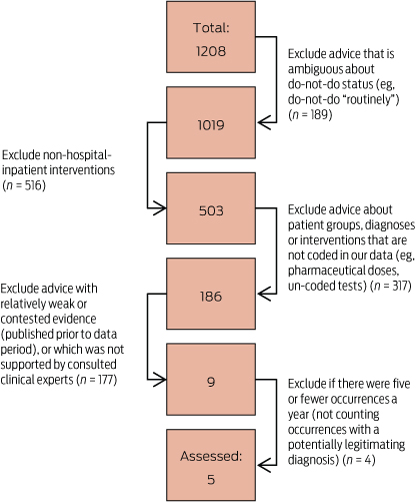

To select do-not-do treatments for analysis, these lists were whittled down by excluding recommendations that were vaguely expressed, and treatments that could not be reliably coded using International Classification of Diseases Australian Modification diagnosis and procedure codes or that did not take place in hospitals. A further filter excluded treatments for which the evidence originally cited was weak, was contradicted by subsequent evidence, or was not supported by the clinical experts we consulted. Finally, since we were investigating variation, we excluded cases with five or fewer occurrences and combined overlapping advice (Box 1). At each of these steps we took a conservative approach, to reduce the chance that our analysis (a proof of concept) would be rejected on the basis of the examples we used. We also looked at examples of treatments with recommendations against being performed “routinely”, but these were selected opportunistically (as with do-not-do advice, the evidence was evaluated and clinical experts were consulted).
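The selection steps above can be read as a sequence of filters applied to each candidate recommendation. The Python sketch below is illustrative only: the `Candidate` fields are hypothetical yes/no flags standing in for what were, in the study, literature-based and expert judgements, and grouping surviving advice by procedure is one assumed way to "combine overlapping advice".

```python
from dataclasses import dataclass

# Hypothetical record for one candidate recommendation ("do not do procedure for condition").
@dataclass
class Candidate:
    procedure: str
    condition: str
    clearly_expressed: bool  # recommendation is not vaguely worded
    codeable: bool           # can be reliably coded with diagnosis and procedure codes
    hospital_based: bool     # the treatment takes place in hospital
    evidence_robust: bool    # evidence not weak or contradicted, and experts agree
    occurrences: int         # times the diagnosis-procedure pair appears in the data

MAX_EXCLUDED_OCCURRENCES = 5  # pairs with five or fewer occurrences are dropped

def select_do_not_do(candidates):
    """Apply each exclusion step in turn, then merge surviving advice by procedure."""
    merged = {}
    for c in candidates:
        if not c.clearly_expressed:
            continue
        if not (c.codeable and c.hospital_based):
            continue
        if not c.evidence_robust:
            continue
        if c.occurrences <= MAX_EXCLUDED_OCCURRENCES:
            continue
        # Overlapping advice about the same procedure is combined into one entry.
        merged.setdefault(c.procedure, []).append(c.condition)
    return merged
```

For example, two surviving recommendations about the same procedure for different conditions end up as a single entry listing both conditions, much as hyperbaric oxygen therapy appears once in Box 2 with a list of conditions.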

Do-not-do and do-not-do-routinely advice was expressed as “do not (routinely) do procedure x for diagnosis y”. Clinical experts reviewed those cases which had multiple diagnoses listed to ensure that none of the additional diagnoses or procedures might provide a justification for the do-not-do (or do-not-do-routinely) procedure. Coding assignment of diagnoses and procedures was reviewed by an independent health information manager.
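One way to picture the "do not (routinely) do procedure x for diagnosis y" form is as a rule that pairs a set of diagnosis codes with a set of procedure codes. The sketch below is a simplified, hypothetical illustration: the code strings are placeholders rather than real classification codes, matching on the principal diagnosis is an assumption, and records with additional diagnoses are routed to review to mirror the expert check described above.

```python
from dataclasses import dataclass, field

# Hypothetical encoding of "do not (routinely) do procedure x for diagnosis y".
# Code strings are placeholders, not real diagnosis or procedure codes.
@dataclass(frozen=True)
class DoNotDoRule:
    procedure_codes: frozenset
    diagnosis_codes: frozenset
    routinely: bool = False  # True for do-not-do-routinely advice

@dataclass
class Admission:
    principal_diagnosis: str
    additional_diagnoses: list = field(default_factory=list)
    procedures: list = field(default_factory=list)

def classify(admission, rule):
    """Return 'no match', 'match' or 'review' for one admission against one rule."""
    if admission.principal_diagnosis not in rule.diagnosis_codes:
        return "no match"
    if rule.procedure_codes.isdisjoint(admission.procedures):
        return "no match"
    # Other recorded diagnoses might justify the procedure, so send to clinical review.
    if admission.additional_diagnoses:
        return "review"
    return "match"
```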

The filtering process excluded practically all of the original candidates of potentially inappropriate care. From the original lists, only five procedures were clearly potentially inappropriate and could be analysed in the data (Box 2). From the many do-not-do-routinely procedures, three were selected as exemplars.

Measuring use of the selected treatments

To look at variation in a fair and meaningful way, we measured the proportion of patients in a hospital with the relevant diagnosis (such as osteoarthritis of the knee) who also received the do-not-do procedure (such as arthroscopic lavage or debridement). This partly addresses a criticism of geographic research: that it does not standardise adequately for differences in underlying rates of disease.

With this metric, we compared only hospitals that were able to provide the do-not-do treatment, that is, hospitals that both performed the relevant procedure and treated more than five patients with the relevant morbidity.
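The metric and the comparator rule above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: each admission is reduced to a hypothetical `(hospital, has_diagnosis, received_procedure)` tuple, and `performs_procedure` is an assumed set of hospitals that perform the procedure for any indication.

```python
from collections import defaultdict

MIN_PATIENTS = 5  # comparators must treat more than five patients with the diagnosis

def comparator_rates(admissions, performs_procedure):
    """Proportion of patients with the diagnosis who received the do-not-do procedure.

    admissions: iterable of (hospital, has_diagnosis, received_procedure) tuples.
    performs_procedure: set of hospitals that perform the procedure at all.
    Returns {hospital: rate}, restricted to comparator hospitals.
    """
    with_dx = defaultdict(int)    # denominator: patients with the relevant diagnosis
    do_not_do = defaultdict(int)  # numerator: those who also received the procedure
    for hospital, has_dx, received in admissions:
        if has_dx:
            with_dx[hospital] += 1
            if received:
                do_not_do[hospital] += 1
    return {
        h: do_not_do[h] / n
        for h, n in with_dx.items()
        if h in performs_procedure and n > MIN_PATIENTS
    }
```

Restricting the denominator to patients with the diagnosis is what distinguishes this from a population rate: a hospital that sees many patients with knee osteoarthritis is not penalised simply for its case mix.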

Results

Incidence of each of the five do-not-do procedures is shown in Box 3.

The incidence of the five identified do-not-do treatments was low, with a total of 5888 procedures identified in the dataset. Although this was a very small proportion of all patients, it represented 4.5% of all patients receiving the relevant procedures (or, in one case, the relevant combination of procedures). This figure is a lower bound, as we did not measure compliance with all do-not-do guidance for these procedures.
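A quick arithmetic check links these totals to the abstract. The sketch below uses only the figures reported above; the denominator range is a back-calculation that allows for 4.5% being rounded, and is not a figure reported in the study.

```python
TOTAL_PROCEDURES = 5888  # do-not-do procedures identified in 2010-11
WEEKS_PER_YEAR = 52
REPORTED_SHARE = 0.045   # 4.5%, as reported (rounded to one decimal place)

# Average weekly count across the year.
per_week = TOTAL_PROCEDURES / WEEKS_PER_YEAR

# Back-calculated range for the number of patients receiving the relevant
# procedures, allowing the true share to lie between 4.45% and 4.55%.
denominator_low = TOTAL_PROCEDURES / (REPORTED_SHARE + 0.0005)
denominator_high = TOTAL_PROCEDURES / (REPORTED_SHARE - 0.0005)

print(f"{per_week:.1f} per week")
print(f"roughly {denominator_low:,.0f} to {denominator_high:,.0f} patients")
```

The weekly figure comes to about 113, consistent with "more than 100 times a week", and the implied denominator is roughly 129 000 to 132 000 patients.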

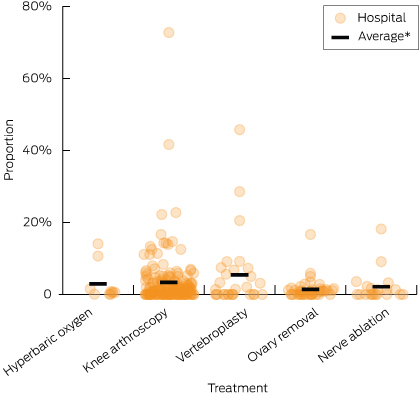

Among hospitals that perform the relevant procedure and also treat patients (> 5) with the relevant morbidity, we found that the incidence of the do-not-do procedures was highly variable (Box 4).

For all the do-not-do treatments, the outliers with the highest rates were a long way from the average. There were 25 hospital departments that provided a do-not-do treatment more than three times as often as the average hospital (within hospital comparator groups). Eight hospital departments provided do-not-do treatments at over five times the average rate, while three departments did so at over 10 times the national rate.
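The outlier counts above compare each department's rate with the average of its comparator group, one procedure at a time. A minimal sketch follows; it assumes the "average" is the unweighted mean of comparator rates, which the text does not specify, and counts departments cumulatively above each multiple.

```python
from statistics import mean

def rate_ratios(rates):
    """Ratio of each department's rate to the comparator-group average.

    rates: {department: proportion of relevant patients receiving the procedure}.
    The average is assumed here to be the unweighted mean of department rates.
    """
    average = mean(rates.values())
    if average == 0:
        return {dept: 0.0 for dept in rates}
    return {dept: rate / average for dept, rate in rates.items()}

def count_above(ratios, thresholds=(3, 5, 10)):
    """Number of departments exceeding each multiple of the average (cumulative)."""
    return {t: sum(1 for r in ratios.values() if r > t) for t in thresholds}
```

Because the counts are cumulative, a department at more than ten times the average is also included in the "more than five times" and "more than three times" counts, which is how the 25, 8 and 3 figures nest.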

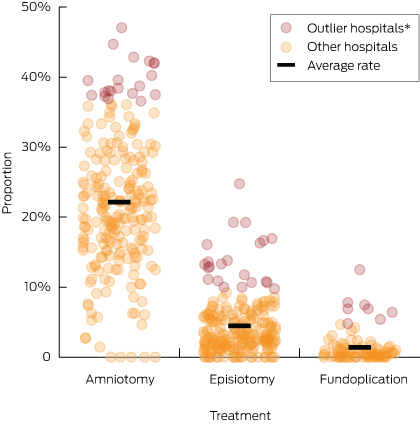

Again, we saw substantial variability in hospital procedure rates for the procedures that should not be done routinely (Box 5), with some hospitals clear outliers. The hospitals with the highest rates for the three do-not-do-routinely treatments offered them at more than nine times, six times and twice the average rate, respectively.

Discussion

This study is the first attempt to quantify the extent of inappropriate care in Australian hospitals for a range of conditions using routine data. We have shown that it is possible to use routine hospital data to identify the incidence of potentially inappropriate care.

Importantly, the procedures used here as examples have either been shown in academic studies to be inappropriate or are recommended against in guidelines, or both. What we have shown is that, despite this advice, and even defunding in the Medicare Benefits Schedule, the procedures are still being performed. Guidelines and funding policies are clearly not sufficient to solve this problem.

Limitations

This study has a number of limitations. First, it cannot be a basis for generalising about the overall incidence of inappropriate care in Australian hospitals. We used a small, non-representative sample of hospital procedures and analysed their incidence in a single year. Because inappropriate care appears to be relatively infrequent, there may be instability in the incidence of our indicator conditions.

Second, the inappropriate care identified in this study can only be considered potentially (rather than definitively) inappropriate. Some of the identified inappropriate treatments may be coding errors or may be justified on the basis of a rare combination of patient characteristics. Routine data can only identify what is occurring, not why. We therefore suggest that the indicator be labelled “potentially inappropriate care”, although the procedures themselves would remain indicators of, prima facie, inappropriate care.

Third, we were not able to analyse rates of inappropriate care in individual private hospitals.

Fourth, we were unable to link data across settings or time. A person who had multiple treatments, one of which was a do-not-do treatment, would thus be counted once in the numerator and multiple times in the denominator. This makes our prevalence estimates conservative.
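A small worked example shows why per-admission counting understates the share of people affected. The sketch assumes a hypothetical `person_id` that the released data did not contain: one person with three admissions, one involving a do-not-do treatment, plus a second person with one admission.

```python
from collections import defaultdict

def per_admission_rate(admissions):
    """admissions: list of (person_id, received_do_not_do) for patients with the diagnosis."""
    return sum(received for _, received in admissions) / len(admissions)

def per_person_rate(admissions):
    """Count each person once, as having received the treatment on any admission."""
    received_any = defaultdict(bool)
    for person, received in admissions:
        received_any[person] = received_any[person] or received
    return sum(received_any.values()) / len(received_any)

example = [("p1", False), ("p1", False), ("p1", True), ("p2", False)]
print(per_admission_rate(example), per_person_rate(example))
```

Here the per-admission rate is 0.25 but the per-person rate is 0.5: the first person contributes once to the numerator and three times to the denominator, which is why unlinked estimates are conservative.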

What should be done to ensure safe and effective care?

Identifying potentially inappropriate practice is of little value unless action follows. The steps outlined below would allow governments to better monitor, and ultimately reduce, inappropriate care in Australian hospitals.

First, forms of inappropriate care should be identified and communicated in a more consistent and accessible way. This should be a role for the Australian Commission on Safety and Quality in Health Care, or for the health productivity and performance commission foreshadowed in the 2014 federal Budget, which would take over the functions of the Commission and of a number of other performance-related national authorities. Such a Commission should, with clinical involvement, identify additional procedures that represent potentially inappropriate care. It might draw on parallel efforts such as NPS MedicineWise’s Choosing Wisely program and the Royal Australasian College of Physicians’ EVOLVE initiative. The Commission should maintain a centralised, easily accessible and continuously updated list of questionable procedures for clinicians to use.

Second, existing data should be used to measure and benchmark a wider range of inappropriate care. In this study, data access agreements meant we could analyse only a handful of do-not-do treatments. Many more could be analysed using additional sources of clinical guidance and data that the Australian Government already holds, and to which the Commission should have access. Studies of geographic variation have linked patient data across datasets and over time.23 Linkage of this kind could allow the Commission to do more, for example by analysing inappropriate use of treatments that should not be first-line interventions. It could also link patient records across different parts of the health system, covering hospitals, the Pharmaceutical Benefits Scheme and the Medicare Benefits Schedule.

Third, the Commission should advise states and hospitals about their rates of questionable care. States should give outlier hospitals a chance to improve, but if high rates persist there should be an external clinical review. The reviews should investigate all aspects of clinical decision making in the relevant department or specialist area. This would include confirming that the data accurately recorded the treatments that were chosen and assessing whether these choices were clinically valid.

States should prioritise investigation of hospitals with high rates of potentially inappropriate care, both for practical reasons and because low rates may reflect cases where most procedures were actually legitimate. States should also consider the volume of patients with the relevant morbidity: our cut-off (more than five patients) could result in investigations that affect the care of very few people. Choosing the thresholds that trigger investigations is ultimately a normative decision. It involves trading off unnecessary investigatory burden and wasted resources against the potential clinical risks to patients of inappropriate care.
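To make the trade-off concrete, the sketch below triages departments using two adjustable thresholds: a minimum rate relative to the comparator average, and a minimum number of patients with the relevant morbidity. Ranking by "excess" procedures (observed minus the number expected at the average rate) is an assumed prioritisation rule for illustration, not one proposed in the study; `Department`, `prioritise` and the default values are all hypothetical, except that `min_patients=6` mirrors the study's "more than five" cut-off.

```python
from dataclasses import dataclass

@dataclass
class Department:
    name: str
    patients_with_diagnosis: int
    do_not_do_count: int

def prioritise(departments, average_rate, min_ratio=3.0, min_patients=6):
    """Hypothetical triage: keep departments above both thresholds,
    ranked by excess procedures relative to the comparator average."""
    candidates = []
    for d in departments:
        rate = d.do_not_do_count / d.patients_with_diagnosis
        expected = average_rate * d.patients_with_diagnosis
        excess = d.do_not_do_count - expected
        if d.patients_with_diagnosis >= min_patients and rate >= min_ratio * average_rate:
            candidates.append((excess, d.name))
    # Largest excess first: more patients potentially affected.
    return [name for _, name in sorted(candidates, key=lambda t: t[0], reverse=True)]
```

With an average rate of 3%, a small department with a very high rate can rank below a larger department with a more modest rate, and raising `min_patients` removes it altogether; where to set these values is exactly the normative choice described above.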

Ultimately, patients should have a reasonable expectation of receiving appropriate care. Our study has shown that procedures that are contrary to contemporary clinical evidence are being done in Australia, and that some hospitals seem to provide a very high rate of these procedures. These hospitals should be alerted to the fact of their aberrant practice and be subject to clinical review if that practice continues, helping to ensure that hospital care in Australia is evidence-based, effective and safe.

Box 2: Potentially inappropriate procedures

- Vertebroplasty for painful osteoporotic vertebral fractures11,12

- Arthroscopic lavage or debridement for osteoarthritis of the knee13,14

- Laparoscopic uterine nerve ablation for chronic pelvic pain15

- Removing healthy ovaries during a hysterectomy16

- Hyperbaric oxygen therapy for a range of conditions including osteomyelitis, cancer, non-diabetic wounds and ulcers, skin graft survival, Crohn’s disease, tinnitus, Bell’s palsy, soft tissue radionecrosis, cerebrovascular disease, peripheral obstructive arterial disease, sudden deafness and acoustic trauma, and carbon monoxide poisoning17-19

Box 4: Distribution of proportion of relevant patients receiving a do-not-do treatment in public hospitals with capacity to perform the do-not-do treatment, 2010–11

*Average rates among comparator hospitals: hyperbaric oxygen therapy (2.9%); arthroscopic lavage or debridement of the knee (3.3%); vertebroplasty (5.4%); oophorectomy (1.4%); uterine nerve ablation (2.1%).

Received 9 January 2015, accepted 28 May 2015

- Stephen J Duckett

- Peter Breadon

- Danielle Romanes

- Grattan Institute, Melbourne, VIC

No relevant disclosures.

- 1. Wennberg J, Gittelsohn A. Small area variations in health care delivery. Science 1973; 182: 1102-1108.

- 2. Wennberg JE. Forty years of unwarranted variation — and still counting. Health Policy 2014; 114: 1-2.

- 3. Organisation for Economic Co-operation and Development. Geographic variations in health care: what do we know and what can be done to improve health system performance? OECD Health Policy Studies. Paris: OECD Publishing, 2014.

- 4. Australian Commission on Safety and Quality in Health Care, Australian Institute of Health and Welfare. Exploring healthcare variation in Australia: analyses resulting from an OECD study. Sydney: ACSQHC, 2014.

- 5. Evans RG. The dog in the night-time: medical practice variations and health policy. In: Andersen TF, Mooney G, editors. The challenges of medical practice variations. London: MacMillan Press, 1990: 117-152.

- 6. Mercuri M, Gafni A. Medical practice variations: what the literature tells us (or does not) about what are warranted and unwarranted variations. J Eval Clin Pract 2011; 17: 671-677.

- 7. Goodman DC. Unwarranted variation in pediatric medical care. Pediatr Clin North Am 2009; 56: 745-755.

- 8. Elshaug AG, McWilliams JM, Landon BE. The value of low-value lists. JAMA 2013; 309: 775-776.

- 9. Elshaug AG, Watt AM, Mundy L, Willis CD. Over 150 potentially low-value health care practices: an Australian study. Med J Aust 2012; 197: 556-560.

- 10. Prasad V, Vandross A, Toomey C, et al. A decade of reversal: an analysis of 146 contradicted medical practices. Mayo Clin Proc 2013; 88: 790-798.

- 11. Kallmes DF, Comstock BA, Heagerty PJ, et al. A randomized trial of vertebroplasty for osteoporotic spinal fractures. N Engl J Med 2009; 361: 569-579.

- 12. Buchbinder R, Osborne RH, Ebeling PR, et al. A randomized trial of vertebroplasty for painful osteoporotic vertebral fractures. N Engl J Med 2009; 361: 557-568.

- 13. Moseley JB, O’Malley K, Petersen NJ, et al. A controlled trial of arthroscopic surgery for osteoarthritis of the knee. N Engl J Med 2002; 347: 81-88.

- 14. Kirkley A, Birmingham TB, Litchfield RB, et al. A randomized trial of arthroscopic surgery for osteoarthritis of the knee. N Engl J Med 2008; 359: 1097-1107.

- 15. National Institute for Health and Clinical Excellence. Laparoscopic uterine nerve ablation (LUNA) for chronic pelvic pain. London: NICE, 2007. (NICE Interventional Procedure Guidance 234.)

- 16. National Institute for Health and Care Excellence. Heavy menstrual bleeding. London: NICE, 2007. (NICE Clinical Guideline 44.)

- 17. Medical Services Advisory Committee. Hyperbaric oxygen therapy. Canberra: MSAC, 2000. (MSAC applications 1018-1020. Assessment report.)

- 18. Medical Services Advisory Committee. 1054 - Hyperbaric oxygen therapy for the treatment of non-healing, refractory wounds in non-diabetic patients and refractory soft tissue radiation injuries. MSAC, 2003.

- 19. Medical Services Advisory Committee. Reconsideration of Application 1054.1: Hyperbaric oxygen treatment (HBOT) for non-diabetic chronic wounds. MSAC, 2012.

- 20. National Institute for Health and Care Excellence. Dyspepsia: management of dyspepsia in adults in primary care. London: NICE, 2004. (NICE Clinical Guideline 17.)

- 21. National Institute for Health and Care Excellence. Intrapartum care: care of healthy women and their babies during childbirth. London: NICE, 2007. (NICE Clinical Guideline 55.)

- 22. National Institute for Health and Care Excellence. Induction of labour. London: NICE, 2008. (NICE Clinical Guideline 70.)

- 23. Schwartz AL, Landon BE, Elshaug AG, et al. Measuring low-value care in Medicare. JAMA Intern Med 2014; 174: 1067-1076.

Ian A Harris

Using MBS data alone (which does not include public patients in public hospitals or compensation scheme patients), we found that nearly 60,000 knee arthroscopies were performed in the 2010–11 financial year. It is known that most knee arthroscopies are done for degenerative conditions, for which the procedure offers no benefit over placebo; approximately 60% of procedures occur in patients aged 45 or older. We have found diagnostic coding to be unreliable, and the restriction to a diagnosis of osteoarthritis, and perhaps to a limited set of procedure codes, might explain why the study counted only 813 knee arthroscopies in one year.

By reducing the number of knee arthroscopies from 60,000 to 813, the study has, we feel, vastly underestimated the extent of the problem, and the variation shown more likely reflects non-clinical factors such as variation in diagnosis and procedure coding.

If the figure of 813 unnecessary arthroscopies were correct, we would consider the problem virtually solved. Unfortunately, we estimate the number to be much higher.

1. Buchbinder R, Harris IA, Sprowson A: Management of degenerative meniscal tears and the role of surgery. BMJ 2015, 350:h2212.

2. Buchbinder R, Richards B, Harris I: Knee osteoarthritis and role for surgical intervention: lessons learned from randomized clinical trials and population-based cohorts. Current Opinion in Rheumatology 2014, 26(2):138-144.

Competing Interests: No relevant disclosures

Prof Ian A Harris

UNSW

Stephen Duckett

Our result may only identify the tip of the iceberg, but that is a great place to start looking for ice. If some shows above the waterline, there might be much more below.

We argued that hospitals providing do-not-do arthroscopies at above-average rates should have their practices reviewed. When an expert clinical team investigates the use of arthroscopies in those hospitals, their review could look at arthroscopies in general, not just the narrow do-not-do category that we used. These reviews could have a big impact on care. The hospitals with above-average rates of do-not-do arthroscopies provide one quarter of all arthroscopies (these figures are for public hospitals only).

In most cases, it takes more than routine hospital data to evaluate treatment choices conclusively. The data can identify hospitals that almost certainly have some poor clinical decision-making, and which may have much more. By focusing on the most reliable red flag for questionable care, clinical reviews have the best chance of improving care and protecting patients from unnecessary treatments.

* Ibrahim JE. It is not appropriate to dismiss inappropriate care. Med J Aust 2015; 203: 161.

Competing Interests: No relevant disclosures

Dr Stephen Duckett

Grattan Institute

Sanjay Sharma

Inappropriate care in this study is dominated by hyperbaric oxygen therapy, and we would like to draw attention to the fact that inappropriate care also occurs in various other medical disciplines. There are numerous reports of inappropriate coronary interventions (3) and endoscopies (4).

Another area of hospital care that often goes unnoticed or unreported is inappropriate pathology and radiology testing. In one meta-analysis, mean rates of over- and underutilisation of laboratory testing were reported to be 20.6% and 44.8%, respectively (5). Radiological imaging is often overused, which not only adds to costs but also exposes patients to unnecessary radiation. The American College of Radiology (ACR) published appropriateness criteria in 2006 (6). In a subsequent study, Andre et al showed that clinicians made little use of the ACR appropriateness criteria when ordering imaging studies for their patients (7).

Hopefully, this article will generate interest in this domain and there will be more studies to look at other areas of inappropriate patient care in our hospitals.

References:

1. Duckett SJ, Breadon P, Romanes D. Identifying and acting on potentially inappropriate care. Med J Aust 2015; 203: 183e.1-183e.6.

2. Ibrahim JE. It is not appropriate to dismiss inappropriate care. Med J Aust 2015; 203: 161-162.

3. Scott IA, Harden H, Coory M. What are appropriate rates of invasive procedures following acute myocardial infarction? A systematic review. Med J Aust 2001; 174: 130-136.

4. Juillerat P, et al. EPAGE II. Presentation of methodology, general results and analysis of complications ... Endoscopy 2009; 41: 240-246.

5. Zhi M, et al. The landscape of inappropriate laboratory testing: a 15-year meta-analysis. PLoS ONE 2013; 8: e78962.

6. Bettmann M. The ACR appropriateness criteria: view from the committee chair. J Am Coll Radiol 2006; 3: 510-512.

7. Andre B, et al. Do clinicians use the American College of Radiology appropriateness criteria in the management of their patients? Am J Roentgenol 2009; 192: 1581-1585.

Competing Interests: No relevant disclosures

Assoc Prof Sanjay Sharma

Ballarat Health Services